Junior Karthik Sangameswaran lives, loves and breathes history.

Walk into a Blue Day tutorial in Jerry Sheehy’s AP European History and World History classroom, and you will often find Sangameswaran engaged in lively, passionate historical debate with Sheehy and other students. Sangameswaran also actively seeks out opportunities to learn more about the subject: He participates in National History Day and History Bowl, and has even enrolled in two history classes at the local community college.

He was also recently a victim of a false accusation of AI cheating in one of those college history classes.

On April 12, Sangameswaran was stunned to find a comment on his discussion forum submission from his professor of Modern Latin American History at De Anza Community College.

“Multiple AI checkers have revealed usage of ChatGPT in your latest submission. Please rewrite and resubmit on a Microsoft Document,” the instructor said. “Further usage of AI will result in strict disciplinary action.”

To those who know Sangameswaran, an obstinate detractor of all uses of AI, the notion that he may have used AI seems absurd. Nevertheless, because a Canvas discussion forum keeps no edit history, Sangameswaran rewrote his assignment in a Word document with edit history to prove his innocence.

To his surprise, the next morning, he found another, more forceful email from the same instructor: “I am subscribed to numerous AI checkers including GPT-zero, and all have revealed high percentages of AI usage. I have formally reported you to judicial affairs. Please stop doing this. It is harmful to your classmates and other students.”

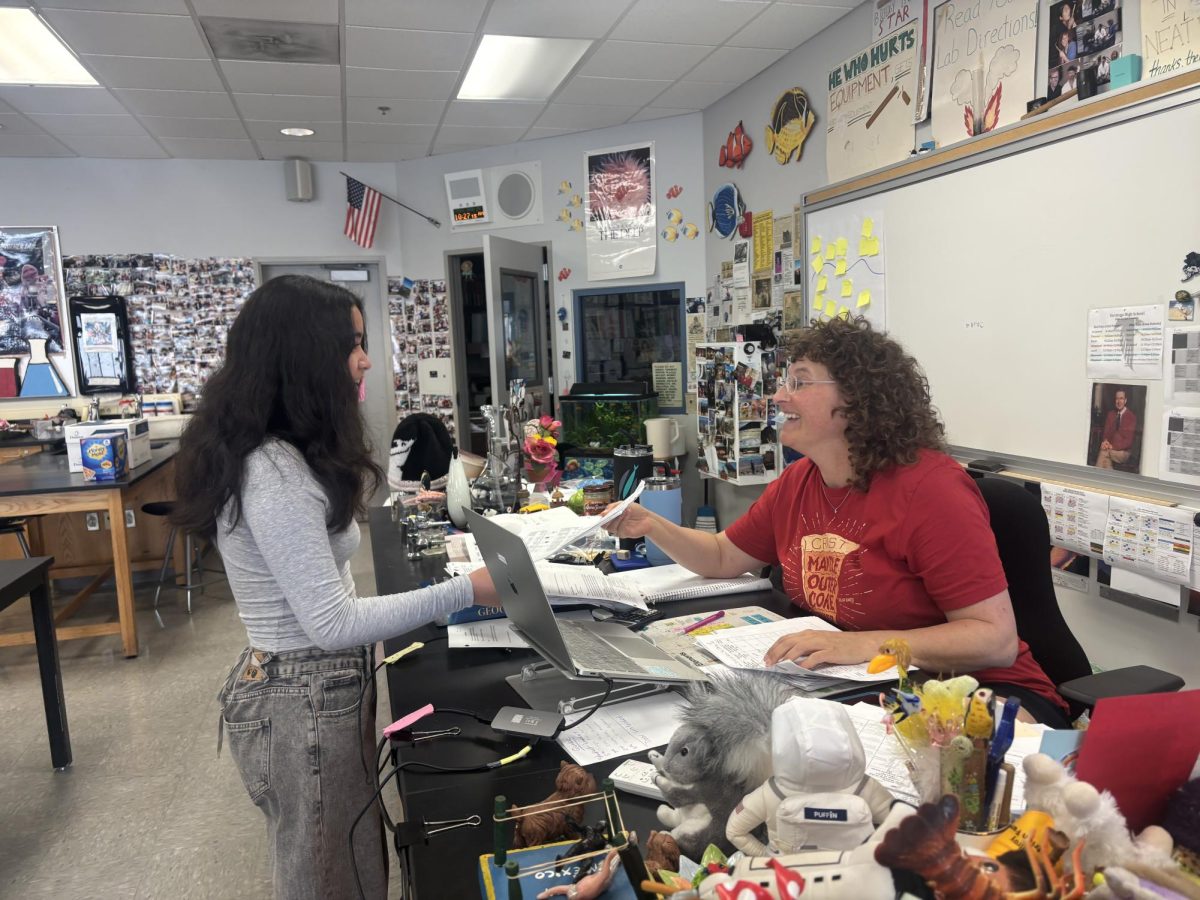

Sangameswaran was even more dumbfounded, especially given that the edit history on the Word document vindicated him. In front of a panel of witnesses, including me and Spanish teacher Stephany Marks, Sangameswaran rewrote his assignment and checked it himself for the use of AI. GPTZero returned a value of 98 percent AI.

It took Sangameswaran more than a week of relentless argument with the instructor to finally prove his innocence. Ultimately, after reviewing the evidence and speaking with Sangameswaran, the instructor decided to drop the charges. However, Sangameswaran shouldn’t have had to fight as long as he did.

AI detectors, which themselves rely on AI of questionable reliability, have a dubious place in the classroom. In a country founded upon the principle of “innocent until proven guilty,” where convictions require proof “beyond a reasonable doubt,” AI checkers are not accurate enough to be given any credence.

Punishing a student on the basis of fallible AI models is akin to sentencing a defendant to death with only a rumor heard on the street as evidence; it goes against the very principles of American justice. To a student with aspirations to attend a top college, a cheating scandal on their record could permanently tarnish their reputation and significantly jeopardize their chances in the roulette of college admissions.

How do these inaccuracies occur so regularly? AI checkers primarily work by looking at two variables: perplexity and burstiness. Perplexity is a measure of unpredictability in a text; errors, figurative language and creative word choice all increase perplexity, which AI generators seek to decrease. Burstiness is a measure of variation determined by changes in sentence structure and length. In humans, burstiness is often high, and AI models have yet to emulate it.
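To make the two measures concrete, here is a minimal Python sketch. It is an illustration under loud assumptions, not how GPTZero or any other commercial checker actually works: the function names are hypothetical, burstiness is approximated as the spread of sentence lengths relative to their average, and perplexity is computed from a simple word-frequency count rather than from the large language model a real detector would use.

```python
import math
import re
from collections import Counter


def sentence_lengths(text):
    """Split text on '.', '!' or '?' and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Toy burstiness: standard deviation of sentence length divided by the mean.

    Assumption: real detectors may define burstiness differently.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text, reference):
    """Toy perplexity of `text` under word frequencies counted from `reference`.

    Assumption: a word-frequency model with add-one smoothing stands in
    for the language model a real detector would use.
    """
    ref_words = reference.lower().split()
    counts = Counter(ref_words)
    vocab = len(counts) + 1  # one extra slot for words never seen in the reference
    total = len(ref_words)
    words = text.lower().split()
    if not words:
        return 0.0
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))
```

Under these toy definitions, a passage made of sentences that are all the same length scores a burstiness of zero, and a passage that sticks to common words scores a lower perplexity than one full of unexpected words. That is exactly the profile a rushed, formulaic homework response can share with machine-generated text.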

However, these markers may fail in a variety of circumstances. For a quick analytical assignment completed late at night with the sole intention of “getting it done,” style and variation, often regarded as hallmarks of good writing, fall to the bottom of a student’s priority list. Many assignments don’t actually require a highly individualistic show of writing skill, leading students to focus on conveying the necessary information as quickly as possible. In these circumstances, an AI detector may easily confuse the work of a tired student with the work of an AI generator.

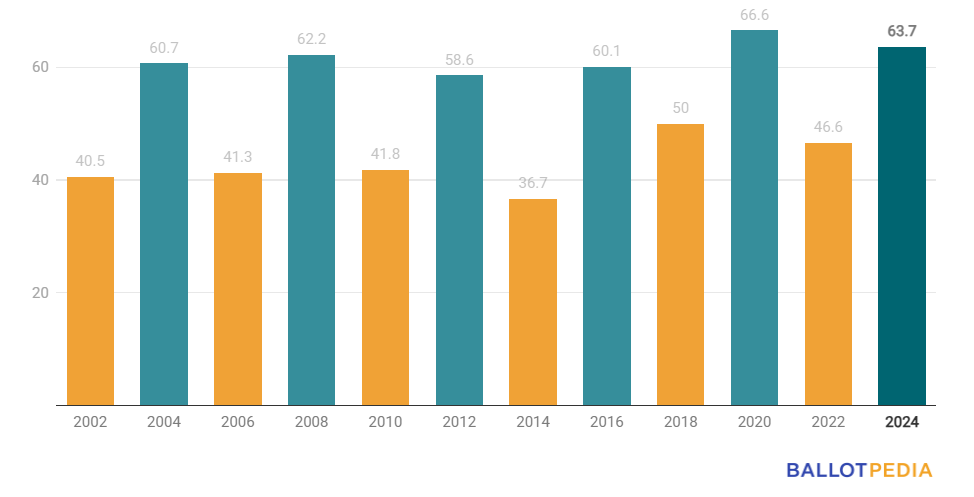

The reliance on AI detectors can lead to any number of issues; in Faith Stackhouse-Daly’s 2022-2023 APUSH class, 53 students were accused of using ChatGPT on AP review assignments. While 28 were handed a referral and a zero, 23 were cleared of wrongdoing after further review by administrators. This raises the question: How were some people cleared and others convicted?

Ultimately, the decision to clear or not clear a student would, presumably, have relied heavily on guesswork. AI checkers, like any other tool or interview, must be considered circumstantial evidence that requires thorough follow-up and no assumption of guilt.

In 2024, an anonymous English 11 Honors student was accused of using ChatGPT on a 30-point major assignment and received both a zero and a referral. According to the student, who maintains their innocence, the main evidence against them was the teacher’s claim that the submission did not match their typical writing style, along with some suspicious copy-and-paste actions in the version history. The student says they drafted the essay in a notepad application because they were on a plane.

In such a situation, the decision of whether to punish the student is a difficult one: The evidence, especially the copying and pasting from the notes app to the Google Doc, weighs heavily against the student. At the same time, the teacher presented no hard, indisputable evidence to corroborate the claim of cheating. When presented with only circumstantial evidence like this, teachers and administrators should err on the side of caution instead of writing referrals for plagiarism.

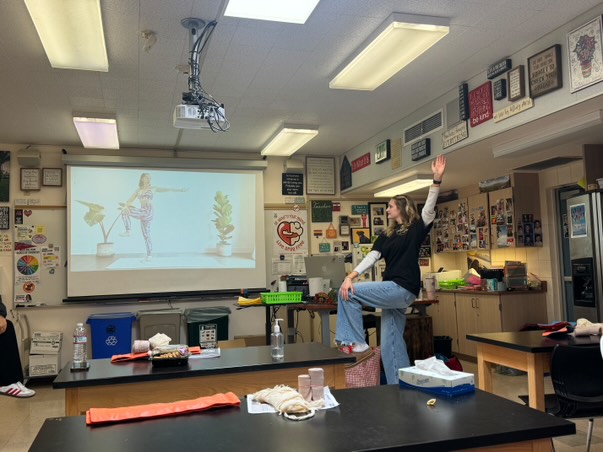

One possible solution to the ambiguous environment of the digital age is a shift to in-person essay writing. In-person essays, at least for a first draft, remove two major issues plaguing classes: the use of ChatGPT and the pervasive use of private tutors. Even setting ChatGPT aside, many students have English tutors who substantially edit student work or contribute heavily to the formation of student ideas. In-person essays allow teachers to verify that a student’s work is representative of their own abilities and free of outside influences. Another possible hurdle that could be placed in a cheating student’s path is a requirement that essays be handwritten. Requiring a handwritten first draft disrupts the convenience of copy-pasting from ChatGPT, which may convince some students to do their own work.

The digital age is one of mind-boggling confusion, whether it’s the increasingly lifelike AI-generated videos or surprisingly realistic student essays. But when certainty is impossible to guarantee, the benefit of the doubt should go to the student. After all, a wrong accusation can result in life-changing consequences such as a flawed college application.

A wrongful accusation casts doubt on a student’s integrity, which, for students like Sangameswaran, is a prized and inviolable possession. Ultimately, educators trying to maintain standards are in a tough position, but they must operate with one of our most fundamental beliefs at the forefront of their minds: innocent until proven guilty.