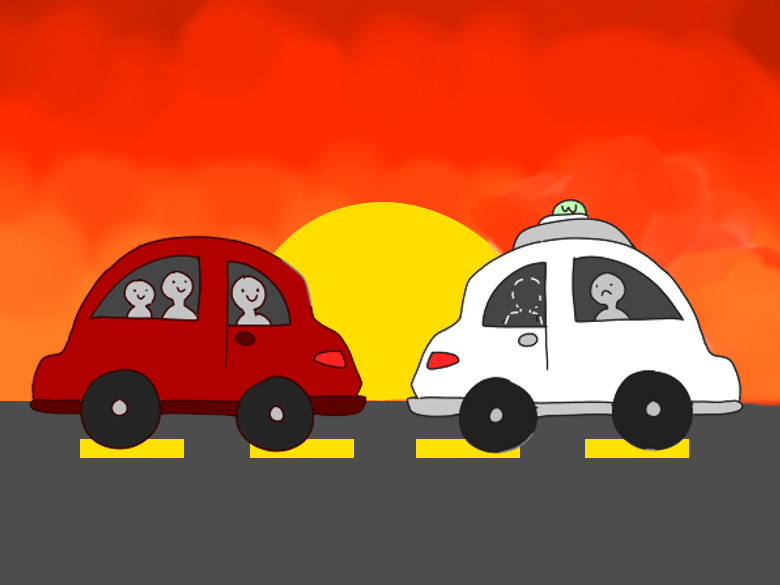

Growing up, we found the idea of self-driving cars to be eons away, a sci-fi creation that perhaps our children or grandchildren would experience. But drive around San Francisco these days and you’ll see more and more self-driving Waymo vehicles, and you realize the roads certainly feel like the future we thought was far off.

In 2009, Google began developing its self-driving technology, which is built on artificial intelligence.

Waymo, formerly the Google self-driving car project, now lays claim to “the world’s first fully driverless ride on public roads.” Its cars use a suite of sensors, including cameras, radar and lidar, to detect other cars, pedestrians, traffic lights and road conditions. According to the company, the purpose of a self-driving car is to make roads safer by reducing the chance of human error behind the wheel.

Self-driving cars have shown that they can be safe. In 2023, Waymo reported only three crashes with injuries over 7.1 million miles, an 85% reduction in injury-causing crashes compared with human drivers, and a rate of police-reported crashes about half that of the average human driver. According to crash witnesses, the crashes were likely caused by human error in the other vehicle, not by the robocar itself.

Despite the statistical evidence for Waymo’s safety, we wouldn’t trust Waymo to drive us around. Waymo cars have frozen up on the road and crashed on multiple occasions. In December 2023, a Waymo taxi hit a tow truck and was damaged. While the company said the faulty software has since been fixed, the possibilities are daunting. If the software has proven unreliable before, there are probably dozens of other issues to work out, and there is so much unpredictability on the roads that trusting the vehicles is a lot to ask.

If a similar situation arose with a human driver, the driver would be able to stop before hitting another car. A fully automated car is also different from a taxi with a driver: once you get in, you are giving directions to a machine, which might misunderstand them, instead of to a human.

In addition, it feels unsettling when our lives aren’t in our own hands; the only control belongs to a car whose limits and programming differ from a human’s. Self-driving cars follow rigid rules when faced with road emergencies that could result in crashes, while humans know how to adapt to emergencies and have the common sense to make the safest decision, not merely the technically correct one.

It’s not only safety that worries us, though; Waymo rides have been known to avoid freeways and to stop far from the requested location. The rides end up being longer because Waymo sticks to the safest routes, avoiding left turns and traffic. While we applaud the effort to put safety first, it’s simply not convenient if you want to get somewhere quickly.

Self-driving cars are the future, but we’re not on board yet.