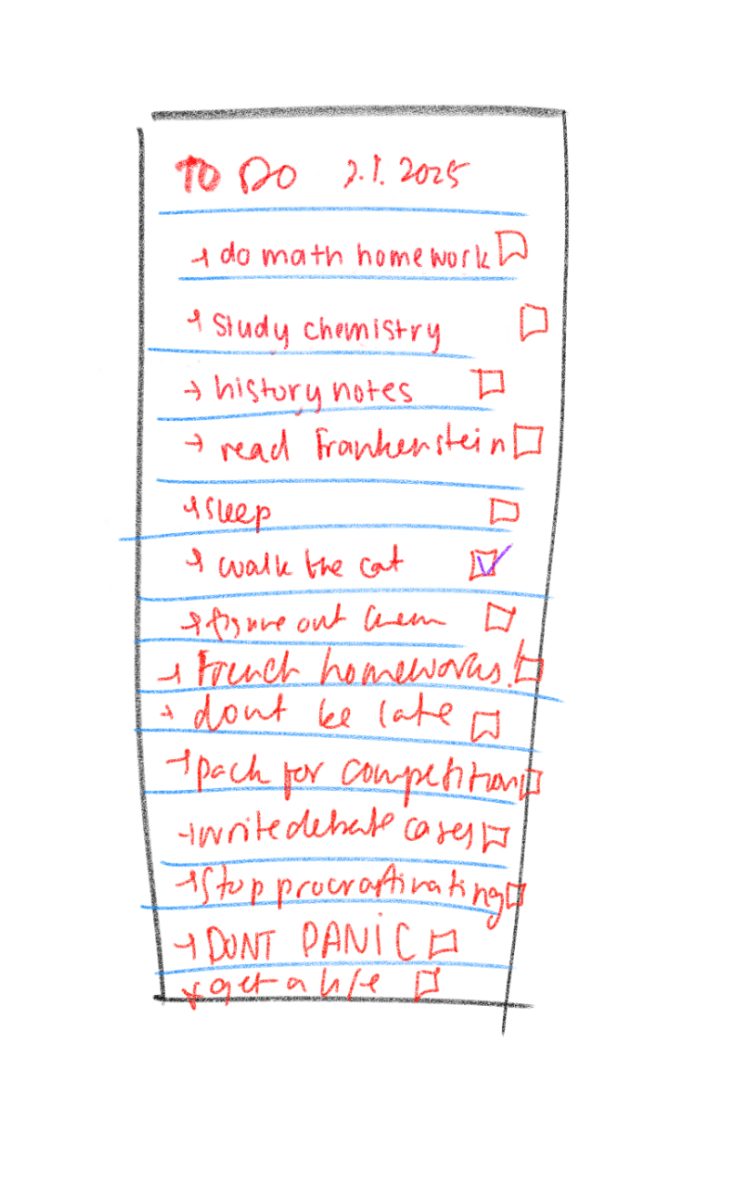

Hours deep into our homework, we searched for some kind of vice to indulge in, only to remember that we had signed up to write this article.

Logging in to the ChatGPT interface, we began peppering it with greetings and phrases we would use in real life, such as “What’s up?” The bot’s response read like an automated message, pretty much devoid of emotion: “Not much, just here to help out. How about you? What’s on your mind today?”

For the next 20 minutes, we bantered with ChatGPT, trying everything from cheesy pickup lines to funny jokes, hoping to evoke any kind of human emotion. To our disappointment, it simply did not work. So, on our mission to extract any sliver of emotion from AI, we quickly switched to DeepAI chat, another online chatbot made specifically for conversation.

“Hey, Pookie,” we typed into the box, hoping for a genuine response. Unfortunately, we got the same mechanical reply: “Hey there, how are you doing?”

So much for our hopes of having an actual AI-generated friend.

Despite our disappointment, we looked further into the popularity of bots and found that 87.2% of consumers rate their interactions with them as either neutral or positive.

The great thing about conversations with chatbots is that they can go on for hours and hours — all with no awkwardness, no stress, no risk of rejection. In other words, they allow users to practice trying to have genuine human connections. But like anything else, this useful function can go too far.

Some people report using chatbots for romantic roleplay and end up creating fictional girlfriends that they form intense attachments to. If you think about it, that’s extremely messed up. It’s basically fetishizing a website.

And, of course, when people are finally done with their AI-generated conversations and return to their complicated real-life relationships, the differences are stark. If we said “Hey, Pookie,” to anyone else on campus (other than a boyfriend or girlfriend), we’d probably get an intense side-eye. No one, and we mean NO ONE, would have replied as the chatbot did.

This is problematic for society.

Chatbots make it seem like they understand us when, in fact, they do not. The emotional connection is fake, and the conversations offer no real validation. The whole exchange is simply a string of surface-level responses, which cheapens real human connections.

For some, using chatbots can become an addiction. Yet even deep questions get only basic robotic answers. When prompted with “What’s the meaning of life?” the AI responded with a super rambly answer. The algorithm that generates these replies limits them to bland responses, ultimately removing the actual human connections people need.

Our conclusion from this experiment: We would not recommend using chatbots to find friendships. Bots’ responses can be funny, yes, but nothing can replace the raw human-to-human connections we create day to day.