“Desktop to web to phones, from text to photos to video. But this isn’t the end of the line. The next platform and medium will be even more immersive, an embodied internet where you’re in the experience, not just looking at it,” Mark Zuckerberg told viewers during the virtual Facebook Connect 2021 keynote on Oct. 28. “We call this the metaverse.”

After announcing Facebook’s rebranding as Meta, Zuckerberg spent the majority of the hour-long keynote exploring Meta’s plan to usher in a new internet era where screens are replaced with headsets and smart glasses: a resplendent space of unfettered human interaction in a virtual world packed with features to match our physical one.

In the speech, Zuckerberg failed to address the dangerous issues that have plagued his company over the past few years. Buried under a congressional investigation, leaked internal reports and whistleblower testimony over user data abuse, Facebook has lost its credibility through its deception and its indifference to the consequences of its policies and actions.

Enter Meta: a strategic rebranding effort that takes Facebook’s place and introduces the metaverse in an attempt to divert attention and resources from the problems at hand.

The company’s sudden emphasis on an all-encompassing virtual space shows yet again that Meta is willing to exacerbate the increasingly disastrous effects its platforms have had on young users over the last decade. The most glaring problem with Meta’s plan to expand internet usage is its impact on the physical and mental wellness of today’s youth, as well as of subsequent generations who will develop a dependence on the internet.

Whether it’s sex abuse or mental health issues, the data all point to a single, insidious conclusion: Facebook hasn’t done enough to keep children on its platform safe, choosing astronomically high profits over the safety and wellbeing of its most vulnerable users. That raises the question: Why should users trust the rebranded company to build an immersive VR world when it has failed to solve its existing problems?

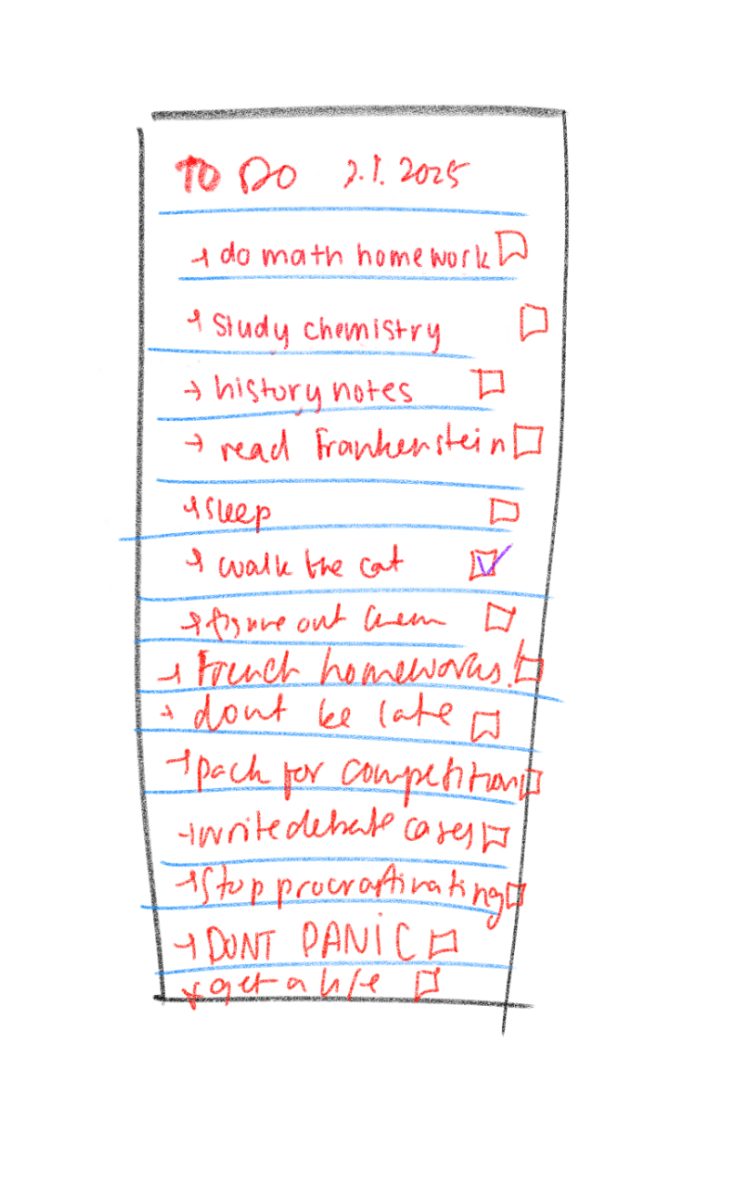

According to research by the Centers for Disease Control and Prevention (CDC), people under age 18 spend more time than they should in front of screens. Screen time averages about six hours a day for youth ages 8 to 10, nine hours a day for those ages 11 to 14 and seven to eight hours a day for older teenagers ages 15 to 18.

Meta’s shift to virtual reality (VR) has arrived at a time when it is losing a key demographic of internet users: teenagers. Young people are increasingly gravitating away from Facebook in favor of apps like TikTok and Snapchat. While Instagram is still relevant among younger users, most of Meta’s ad revenue comes from the Facebook platform.

However, an overwhelming majority of teenagers have said in surveys that they would look forward to using VR platforms regularly. Given these statistics, it makes sense that Meta would use the allure of a VR platform to entice younger demographics into using its products.

Meta touts the idea that the time we would spend in its all-immersive metaverse is actually beneficial. In Meta’s hour-long promotional video, Zuckerberg claimed, “This isn’t about spending more time on screens. It’s about making the time that we already spend better.”

This is an easy thing to say for a company whose multi-billion-dollar profit model depends on how much time users spend on its platforms. For the users themselves, however, the effects are often harmful to their mental health, their physical health and their safety.

For far too long, ethical considerations have taken a backseat in tech. Whether it’s a child trafficked because of Facebook’s relaxed security measures or white supremacist violence fomented by the company’s failure to enforce its own anti-hate-speech policies, the consequences are ugly.

Whistleblower Frances Haugen revealed the scale of harm Meta’s platforms have caused vulnerable minors and, notably, that the company knew of these effects but chose not to act on that knowledge to protect children. For instance, Haugen released documents showing that Facebook researchers had learned of these dangers from an internal study in which 32% of teenage girls surveyed reported that when they felt bad about their bodies, their Instagram feeds made them feel worse, a pattern the author sees as inevitably leading to higher rates of eating disorders in that demographic.

A report by the industry watchdog Tech Transparency Project (TTP) showed that Facebook chose not to filter ads promoting drug and alcohol use, disordered eating, online dating and other high-risk activities. The study concluded that these ads reached up to 3 million teens. Facebook later pledged to limit this content, but the company’s lack of safeguards revealed that its priorities lay with ad revenue instead of safety.

The company has also taken a dangerously lax approach to preventing the sexual exploitation of minors online. A separate TTP analysis reported that between 2013 and 2019, there were 366 federal criminal cases involving child pornography, the grooming and solicitation of minors and sex trafficking on the platform. Despite Meta’s pledge to eradicate this rampant exploitation, Justice Department data shows the number of cases steadily increasing rather than decreasing.

One of the most alarming examples of Meta’s indifference occurred when the company vehemently fought legislation that could help protect minors on its platform: the 2017 FOSTA-SESTA bills, which would hold platforms liable for knowingly facilitating sex trafficking or the exploitation of minors. That year, the company spent over $3 million more on lobbying in opposition to the bipartisan legislation.

The inevitable advancement of VR will only bring a new set of ethical and safety issues. A paper by Mary Anne Franks, president of the Cyber Civil Rights Institute, for example, argues that abuse is far more potent and traumatic within a VR space than through a screen. Exhaustive research covers almost every possible risk of conventional social media, but research on the short- and long-term effects of VR is still developing. However, certain trends have been established. In one instance, children who took part in a research study about VR use showed an overwhelming tendency to become dependent on the technology within a short period of time. This would inevitably worsen the problem of tech addiction.

It isn’t difficult to imagine the numerous ways abusers could exploit VR to intensify their abuse. If a child can be groomed over a screen without ever seeing their abuser’s face, it would be even easier, and more gratifying, for the abuser to groom them in a space where they can interact “face-to-face.”

The same spaces that could serve as virtual workplaces could also serve as virtual meeting places for far-right groups forming cross-country coalitions. The ease of meeting in VR would make this danger even greater, especially given the growing number of teenagers falling into alt-right internet pipelines.

VR itself isn’t what exacerbates these issues; rather, Meta’s disgraceful lack of an ethical compass is the culprit. If VR spaces were well moderated, with user safety, privacy and well-being at the forefront of a company’s priorities, these problems would be much less prevalent. Meta, however, is a company that has consistently ignored the importance of keeping vulnerable users safe in favor of increased ad revenue.

Risk-taking is a core tenet of successful technology companies. But in most cases, companies place their bets at their own risk, not their users’. Meta plays a dangerous game by choosing to risk lives in order to gain control over the future of VR as quickly as possible.

VR will undoubtedly be a major revolution in technology, with its own benefits and pitfalls. But the difficult questions aren’t about what problems VR in general will bring. They are about whom we want to trust when our safety, privacy and wellbeing are all at stake in a rapidly changing technology scene. Based on the underlying issues with Facebook that have recently come to light, the answer is resoundingly clear: It should not be Meta.