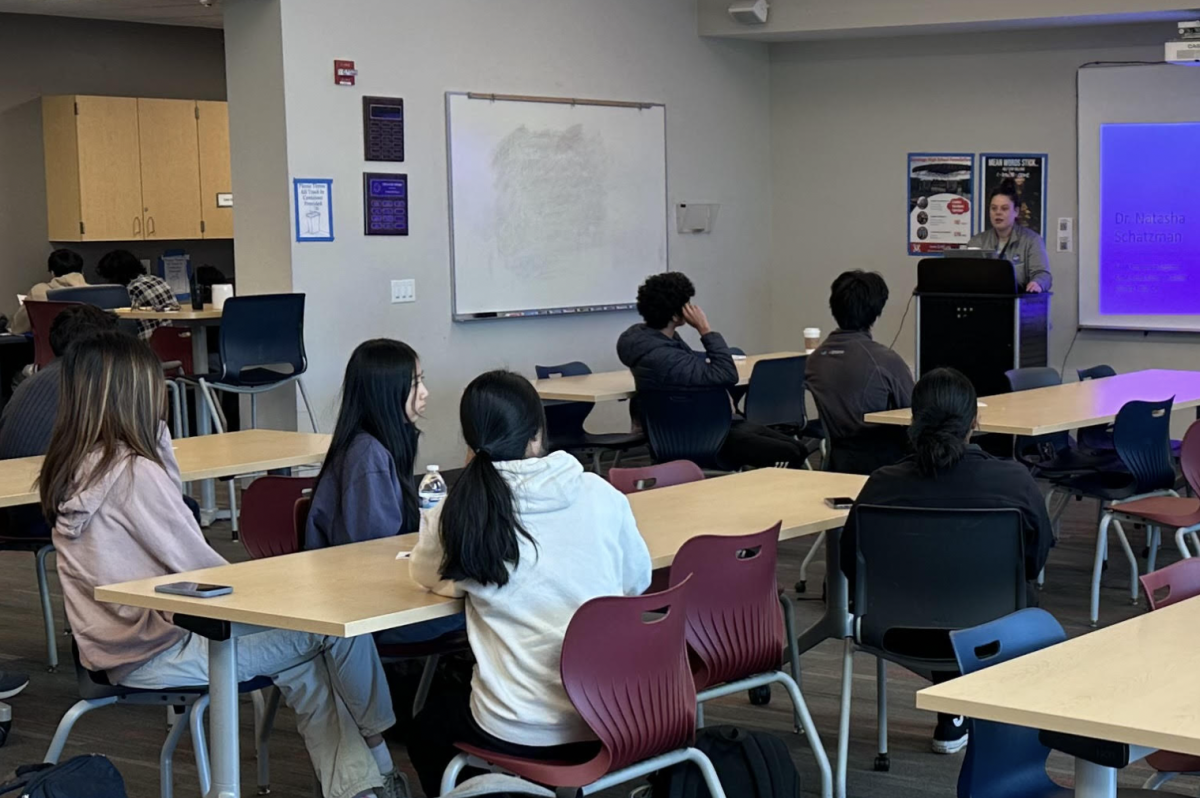

Juniors got a sneak peek into a new era of computer-based testing that requires more advanced thinking skills when they took the Smarter Balanced training tests during the week of April 21.

The tests gave students and teachers an opportunity to become familiar with the software and interface that will be used in future Common Core tests.

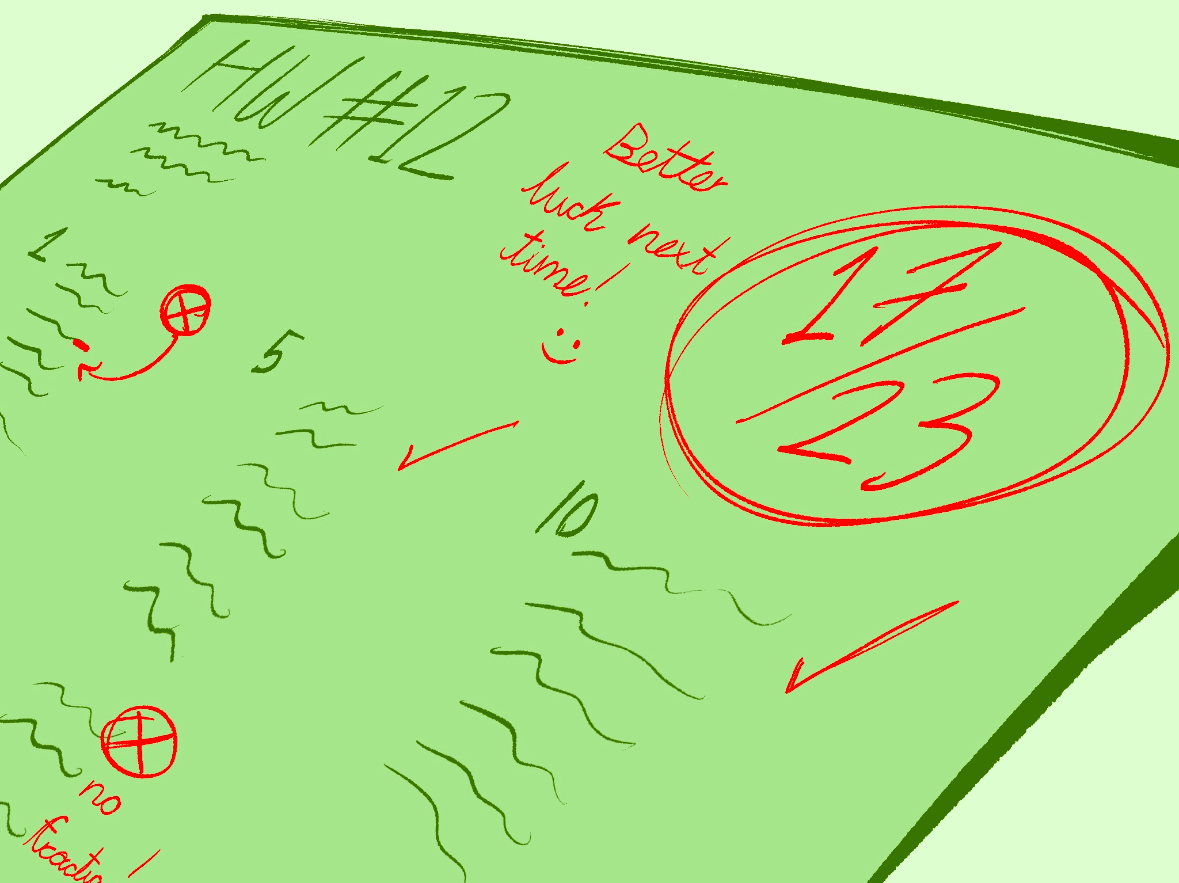

The first part of the test consisted of a reading portion and a math portion. Students took a second math portion, made up of free-response questions, after a group lesson taught by math department chair Debra Troxell.

“[I wasn’t] really proctoring, but leading a half-hour lesson that [preceded a] multi-step math problem, much like free response, to explain what the problem needs to analyze,” Troxell said. “The purpose of the discussion is to get everybody on [the] same page as far as understanding and terminology. Something more tricky would require more discussion.”

When comparing her experience proctoring the STAR test with her experience teaching the group lesson, Troxell noticed that the Smarter Balanced test requires much more involvement from test takers. She also said the questions required more thinking than typical multiple-choice questions.

Assistant principal Brian Safine noted that the new test requires more flexibility and more thorough answers from students, but said it has some unexpected problems, such as timing.

Short-answer questions in the morning took longer than expected, while the performance-based questions in the afternoon took less time than expected, Safine said.

According to Safine, potential problems when the test is implemented include how to accommodate the increased number of test-takers and whether the computers in the library’s research center would be usable, because their screen placement forces juniors to look down.

The test received mixed reactions from juniors. Junior Alex Yeh thought it was better than the STAR test because it required more thinking.

“The test required a lot more thought than the STAR test and was more related to the material we [learned] in school, such as [supporting an] answer with text and [writing] an intro paragraph,” Yeh said. “You also needed to read a lot more carefully for some of the problems, whereas on the STAR test the problems were easier and more direct.”

Yet, according to Yeh, the math sections were both difficult and confusing to complete on the computer, while the problems on the STAR test were easier. He would have preferred to use his own scientific calculator to solve the problems.

Junior Kevin A. Lee believes the new test was better than the previous STAR test in some aspects, but feels there is still much work to be done to improve it, including separating the English and math sections and making the math lesson more useful.

“[The test] still has a whole bunch of kinks,” Lee said. “The fact that they made you write out answers and made you actually think instead of mindlessly filling out bubbles [was challenging] because it is easier to guess than to actually work through problems.”

For his part, Safine thinks the test was a huge success, with no technical problems reported.

“We’ve been really pleased with [the] technological interface,” Safine said. “Most students begin testing within a minute of sitting down. We’re pleased with bandwidth and the heights of flexibility of managing students.”